Text Preprocessing for Data Scientists

Text Preprocessing

Text preprocessing is a critical step in text analysis and natural language processing (NLP). It transforms text into a predictable, analyzable form so that machine learning algorithms can perform better. This is a handy text preprocessing guide and a continuation of my previous blog on Text Mining. In this blog, I have used a Twitter dataset from Kaggle.

There are different ways to preprocess the text. Here are some of the common approaches that you should know about and I will try to highlight the importance of each.

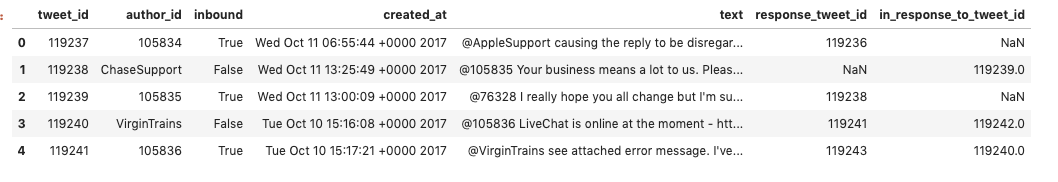

Code

    # Importing necessary libraries
    import numpy as np
    import pandas as pd
    import re
    import nltk
    import spacy
    import string

    # Reading the dataset
    df = pd.read_csv("sample.csv")
    df.head()

Output

Lower Casing

Lower casing is the most common and simplest text preprocessing technique, and it is applicable to most text mining and NLP problems. The main goal is to convert the text to lower case so that ‘apple’, ‘Apple’ and ‘APPLE’ are treated the same way.

Code

    # Lower casing --> creating a new column called 'text_lower'
    df['text_lower'] = df['text'].str.lower()
    df['text_lower'].head()

Output

    0    @applesupport causing the reply to be disregar...
    1    @105835 your business means a lot to us. pleas...
    2    @76328 i really hope you all change but i'm su...
    3    @105836 livechat is online at the moment - htt...
    4    @virgintrains see attached error message. i've...
    Name: text_lower, dtype: object
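
To see why lower casing matters for counting, here is a minimal sketch using only the standard library. The token list is a made-up sample, not taken from the Kaggle dataset.

```python
from collections import Counter

# Hypothetical sample tokens; not taken from the Kaggle dataset.
tokens = ["apple", "Apple", "APPLE", "phone"]

# Without lower casing, each spelling variant is counted separately.
raw_counts = Counter(tokens)

# After lower casing, the three variants collapse into a single entry.
lower_counts = Counter(token.lower() for token in tokens)

print(len(raw_counts))        # 4 distinct entries
print(lower_counts["apple"])  # 3
```

Keep in mind that lower casing can also discard useful signal, for example the difference between "Apple" the company and "apple" the fruit, so it is worth checking against the task at hand.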

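Removal of Punctuation

Code

    # Removing punctuation, creating a new column called 'text_punct'
    # regex=True is needed in recent pandas versions, where str.replace
    # treats the pattern as a literal string by default
    df['text_punct'] = df['text'].str.replace(r'[^\w\s]', '', regex=True)
    df['text_punct'].head()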

Output

    0    applesupport causing the reply to be disregard...
    1    105835 your business means a lot to us please ...
    2    76328 I really hope you all change but im sure...
    3    105836 LiveChat is online at the moment https...
    4    virginTrains see attached error message Ive tr...
    Name: text_punct, dtype: object
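
Outside of pandas, the same idea can be sketched with the standard library's `string.punctuation` and `str.translate`. This is an alternative to the regex above, not the method used in this blog, and the helper name `remove_punctuation` is my own. It differs slightly: it only strips ASCII punctuation (so curly quotes survive), and it also removes underscores, which `[^\w\s]` keeps.

```python
import string

# Translation table that deletes every ASCII punctuation character.
PUNCT_TABLE = str.maketrans("", "", string.punctuation)

def remove_punctuation(text):
    """Delete ASCII punctuation; letters, digits and whitespace are kept."""
    return text.translate(PUNCT_TABLE)

print(remove_punctuation("@AppleSupport I've tried, twice!"))
# AppleSupport Ive tried twice
```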

Stop-word removal

Stop words are a set of commonly used words in a language. Examples of stop words in English are “a”, “we”, “the”, “is” and “are”. The idea behind removing stop words is that, by dropping low-information words from the text, we can focus on the important words instead. We can either create a custom list of stop words ourselves (based on the use case) or use a predefined list from a library.

Code

    # Importing stopwords from the nltk library
    # (run nltk.download('stopwords') once if the corpus is not installed)
    from nltk.corpus import stopwords
    STOPWORDS = set(stopwords.words('english'))

    # Function to remove the stopwords
    # (named remove_stopwords so it does not shadow the imported stopwords module)
    def remove_stopwords(text):
        return " ".join([word for word in str(text).split() if word not in STOPWORDS])

    # Applying the function to 'text_punct' and storing the result in 'text_stop'
    df["text_stop"] = df["text_punct"].apply(remove_stopwords)
    df["text_stop"].head()

Output

    0    appleSupport causing reply disregarded tapped ...
    1    105835 your business means lot us please DM na...
    2    76328 I really hope change Im sure wont becaus...
    3    105836 LiveChat online moment httpstcoSY94VtU8...
    4    virgintrains see attached error message Ive tr...
    Name: text_stop, dtype: object
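
The custom-list option mentioned above could look roughly like this. The word list and the function name `remove_custom_stopwords` are hypothetical and would need tuning for a real corpus. Unlike the NLTK version above, this sketch compares lower-cased tokens, so capitalized stop words such as "Thanks" are dropped too.

```python
# Hypothetical custom stop-word list for a customer-support corpus.
CUSTOM_STOPWORDS = {"a", "we", "the", "is", "are", "please", "thanks"}

def remove_custom_stopwords(text, stop_words=CUSTOM_STOPWORDS):
    """Drop tokens whose lower-cased form is in stop_words."""
    kept = [word for word in text.split() if word.lower() not in stop_words]
    return " ".join(kept)

print(remove_custom_stopwords("Thanks we are checking the order"))
# checking order
```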

Common word removal

We can also remove commonly occurring words from our text data. First, let’s check the 10 most frequently occurring words in our text data.

Code

    # Checking the 10 most frequent words
    from collections import Counter
    cnt = Counter()
    for text in df["text_stop"].values:
        for word in text.split():
            cnt[word] += 1
    cnt.most_common(10)

Output

    [('I', 34), ('us', 25), ('DM', 19), ('help', 17), ('httpstcoGDrqU22YpT', 12),
     ('AppleSupport', 11), ('Thanks', 11), ('phone', 9), ('Ive', 8), ('Hi', 8)]

Now, we can remove the frequent words from the given corpus. This is taken care of automatically if we use tf-idf, because words that occur in most documents receive low weights.

Code

    # Removing the frequent words
    freq = set([w for (w, wc) in cnt.most_common(10)])

    # Function to remove the frequent words
    def freqwords(text):
        return " ".join([word for word in str(text).split() if word not in freq])

    # Passing the function freqwords
    df["text_common"] = df["text_stop"].apply(freqwords)
    df["text_common"].head()

Output

    0    causing reply disregarded tapped notification ...
    1    105835 Your business means lot please name zip...
    2    76328 really hope change Im sure wont because ...
    3    105836 LiveChat online moment httpstcoSY94VtU8...
    4    virgintrains see attached error message tried ...
    Name: text_common, dtype: object
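
To illustrate the tf-idf remark, here is a small sketch of the plain inverse document frequency, log(N / df), on three made-up documents. The helper `idf` is my own. Library implementations often use smoothed variants, so their numbers will differ, but the effect is the same: a word that appears everywhere gets the lowest weight.

```python
import math

# Three hypothetical, already-cleaned documents.
docs = [
    "help phone battery".split(),
    "help account login".split(),
    "help delivery late".split(),
]

def idf(term, documents):
    """Plain inverse document frequency: log(N / number of docs containing term).

    Assumes the term occurs in at least one document.
    """
    containing = sum(1 for doc in documents if term in doc)
    return math.log(len(documents) / containing)

print(idf("help", docs))   # 0.0, the word appears in every document
print(idf("phone", docs))  # log(3), roughly 1.0986
```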

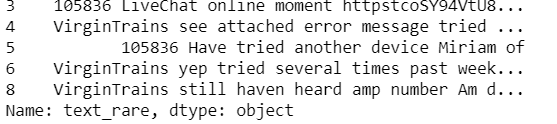

Rare word removal

This is very intuitive: some words are very unique in nature, like names, brands and product names, and some noise tokens, such as HTML leftovers, also need to be removed for different NLP tasks. We can also use the length of a word as a criterion, removing words that are very short or very long.

Code

    # Removal of the 10 rarest words, stored in a new column called 'text_rare'
    freq = pd.Series(' '.join(df['text_common']).split()).value_counts()[-10:]  # 10 rare words
    freq = list(freq.index)
    df['text_rare'] = df['text_common'].apply(lambda x: " ".join(w for w in x.split() if w not in freq))
    df['text_rare'].head()

Output

    0    causing reply disregarded tapped notification ...
    1    105835 Your business means lot please name zip...
    2    76328 really hope change Im sure wont because ...
    3    105836 liveChat online moment httpstcoSY94VtU8...
    4    virgintrains see attached error message tried ...
    Name: text_rare, dtype: object
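
The word-length criterion mentioned above is not shown in the code, so here is a minimal sketch of it. The function name `filter_by_length` and the thresholds (2 and 15 characters) are arbitrary assumptions, not values used in this blog.

```python
def filter_by_length(text, min_len=2, max_len=15):
    """Keep only tokens whose length lies within [min_len, max_len]."""
    return " ".join(
        word for word in text.split() if min_len <= len(word) <= max_len
    )

print(filter_by_length("a really supercalifragilisticexpialidocious word x"))
# really word
```

A length cap is a cheap way to drop leftovers such as squashed URLs, but it will also remove legitimate long words, so pick the bounds after looking at the data.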

Spelling Correction

Social media data is always messy and contains spelling mistakes. Hence, spelling correction is a useful preprocessing step because it helps us avoid multiple variants of the same word. For example, “text” and “txt” will be treated as different words even if they are used in the same sense. This can be done with the textblob library.

Code

    # Spell check using TextBlob for the first 5 records
    from textblob import TextBlob
    df['text_rare'][:5].apply(lambda x: str(TextBlob(x).correct()))

Output

Emoji removal

Emojis are part of our life, and social media text has a lot of them. We need to remove them in our text analysis.

Code (reference: GitHub)

    # Function to remove emoji
    # (takes 'text' rather than 'string' so it does not shadow the imported string module)
    def remove_emoji(text):
        emoji_pattern = re.compile("["
            u"\U0001F600-\U0001F64F"  # emoticons
            u"\U0001F300-\U0001F5FF"  # symbols & pictographs
            u"\U0001F680-\U0001F6FF"  # transport & map symbols
            u"\U0001F1E0-\U0001F1FF"  # flags (iOS)
            u"\U00002702-\U000027B0"
            u"\U000024C2-\U0001F251"
            "]+", flags=re.UNICODE)
        return emoji_pattern.sub(r'', text)

    remove_emoji("Hi, I am Emoji 😜")

    # Passing the remove_emoji function to 'text_rare'
    df['text_rare'] = df['text_rare'].apply(remove_emoji)

Output

    'Hi, I am Emoji '

Emoticons removal

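In the previous step, we removed emojis. Now we are going to remove emoticons. What is the difference between an emoji and an emoticon? An emoticon is built from keyboard characters, such as :-), while an emoji is a Unicode pictograph, such as 😜.

We can use the emot library for this. Please refer to the emot documentation for more details.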

Code

    # UNICODE_EMO and EMOTICONS are the names exposed by the emot release used here;
    # other releases of the library may name these dictionaries differently
    from emot.emo_unicode import UNICODE_EMO, EMOTICONS

    # Function for removing emoticons
    def remove_emoticons(text):
        emoticon_pattern = re.compile(u'(' + u'|'.join(k for k in EMOTICONS) + u')')
        return emoticon_pattern.sub(r'', text)

    remove_emoticons("Hello :-)")

    # Applying remove_emoticons to 'text_rare'
    df['text_rare'] = df['text_rare'].apply(remove_emoticons)

Output

    'Hello '

Converting Emoji and Emoticons to words

In sentiment analysis, emojis and emoticons express an emotion. Hence, removing them might not be a good solution.

Code

    from emot.emo_unicode import UNICODE_EMO, EMOTICONS

    # Converting emojis to words
    def convert_emojis(text):
        for emot in UNICODE_EMO:
            text = text.replace(emot, "_".join(UNICODE_EMO[emot].replace(",","").replace(":","").split()))
        return text

    # Converting emoticons to words
    def convert_emoticons(text):
        for emot in EMOTICONS:
            text = re.sub(u'('+emot+')', "_".join(EMOTICONS[emot].replace(",","").split()), text)
        return text

    # Example
    text = "Hello :-) :-)"
    convert_emoticons(text)

    text1 = "Hilarious 😂"
    convert_emojis(text1)

    # Passing both functions to 'text_rare'
    df['text_rare'] = df['text_rare'].apply(convert_emoticons)
    df['text_rare'] = df['text_rare'].apply(convert_emojis)

Output

    'Hello happy smiley face happy smiley face:-)'
    'Hilarious face_with_tears_of_joy'

Removal of URLs

We can remove URLs from the text with a regular expression.

Code

    # Function for removing URLs
    def remove_urls(text):
        url_pattern = re.compile(r'https?://\S+|www\.\S+')
        return url_pattern.sub(r'', text)

    # Example
    text = "This is my website, https://www.abc.com"
    remove_urls(text)

    # Passing the function to 'text_rare'
    df['text_rare'] = df['text_rare'].apply(remove_urls)

Output

    'This is my website, '

Removal of HTML tags

Another common preprocessing technique is removing HTML tags, which are usually present in scraped data. We can use the Beautiful Soup library for this.

Code

    from bs4 import BeautifulSoup

    # Function for removing HTML
    def html(text):
        return BeautifulSoup(text, "lxml").text

    # Example
    text = """<div> <h1> This</h1> <p> is</p> <a href="https://www.abc.com/"> ABCD</a> </div> """
    print(html(text))

    # Passing the function to 'text_rare'
    df['text_rare'] = df['text_rare'].apply(html)

Output

    This is ABCD
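
If Beautiful Soup or lxml is not available, a rough equivalent can be sketched with the standard library's `html.parser`. This is an alternative under that assumption, not the approach used above; the class and function names are my own. It keeps every text node, including the contents of `<script>` and `<style>` elements, so it suits simple fragments better than full pages.

```python
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collects the text nodes of a document and ignores the tags."""

    def __init__(self):
        super().__init__()
        self.chunks = []

    def handle_data(self, data):
        self.chunks.append(data)

def strip_html(markup):
    parser = TextExtractor()
    parser.feed(markup)
    parser.close()  # flush any text still buffered by the parser
    # Collapse the whitespace left behind by the removed tags.
    return " ".join("".join(parser.chunks).split())

sample = '<div> <h1> This</h1> <p> is</p> <a href="https://www.abc.com/"> ABCD</a> </div>'
print(strip_html(sample))
# This is ABCD
```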

Tokenization

Tokenization refers to dividing the text into a sequence of words or sentences.
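
As a quick illustration of both kinds of tokenization, here is a naive regex-based sketch. The function names are my own, and the sentence splitter simply breaks after ".", "!" or "?" followed by whitespace, so abbreviations such as "e.g." will trip it up. Dedicated tokenizers handle such cases better.

```python
import re

def sentence_tokenize(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if s]

def word_tokenize(text):
    """Word tokens: runs of letters, digits or underscores."""
    return re.findall(r"\w+", text)

sample = "LiveChat is online. Please DM us! Thanks"
print(sentence_tokenize(sample))
# ['LiveChat is online.', 'Please DM us!', 'Thanks']
print(word_tokenize("Please DM us!"))
# ['Please', 'DM', 'us']
```

Using `re.findall(r"\w+", ...)` instead of splitting on `\W+` avoids the empty strings that a split produces when the text starts or ends with a non-word character.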

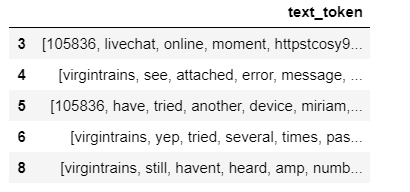

Code

    # Creating a function for tokenization
    def tokenization(text):
        # raw string, so that \W is passed to the regex engine unchanged
        text = re.split(r'\W+', text)
        return text

    # Passing the function to 'text_rare' and storing the result in 'text_token'
    df['text_token'] = df['text_rare'].apply(lambda x: tokenization(x.lower()))
    df[['text_token']].head()

Output

Stemming and Lemmatization

Lemmatization is the process of converting a word to its base form. The difference between stemming and lemmatization is that lemmatization considers the context and converts the word to its meaningful base form, whereas stemming just removes the last few characters, often leading to incorrect meanings and spelling errors. Here, only lemmatization is performed. We need to provide the POS tag of the word along with the word to the lemmatizer in NLTK. Depending on the POS tag, the lemmatizer may return different results.
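
To make the difference concrete, here is a toy sketch. The suffix stripper below is deliberately crude and is not a real stemming algorithm such as Porter's, and the lemma table is hand-written to stand in for a dictionary lookup. Both are assumptions for illustration only.

```python
def toy_stem(word):
    """Crude suffix stripping, only to illustrate how stemming behaves."""
    for suffix in ("ies", "ing", "ed", "es", "s"):
        # Require a stem of at least 3 characters so short words stay intact.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

# Hand-written lemmas standing in for a dictionary lookup.
TOY_LEMMAS = {"studies": "study", "caring": "care", "tried": "try"}

for word in ("studies", "caring", "tried"):
    print(word, "->", toy_stem(word), "|", TOY_LEMMAS[word])
# studies -> stud | study
# caring -> car | care
# tried -> tri | try
```

The stems "stud", "car" and "tri" are either not words or mean something else, which is the kind of error the paragraph above describes.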

Code

    from nltk.corpus import wordnet
    from nltk.stem import WordNetLemmatizer

    lemmatizer = WordNetLemmatizer()
    # POS tag mapping: noun, verb, adjective and adverb
    wordnet_map = {"N": wordnet.NOUN, "V": wordnet.VERB, "J": wordnet.ADJ, "R": wordnet.ADV}

    # Function for lemmatization using POS tags
    def lemmatize_words(text):
        pos_tagged_text = nltk.pos_tag(text.split())
        return " ".join([lemmatizer.lemmatize(word, wordnet_map.get(pos[0], wordnet.NOUN)) for word, pos in pos_tagged_text])

    # Passing the function to 'text_rare' and storing the result in 'text_lemma'
    df["text_lemma"] = df["text_rare"].apply(lemmatize_words)

Output

The above methods are common text preprocessing steps.
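
When chaining several of these steps, the order matters. One thing worth noting from the outputs above is that tokens like "httpstcoGDrqU22YpT" appear among the frequent words: once punctuation has been stripped, a URL no longer contains "://" and a URL regex cannot match it. A minimal standard-library sketch that removes URLs before punctuation is shown below. The stop-word set, the function name `preprocess` and the example URL are all hypothetical.

```python
import re
import string

URL_PATTERN = re.compile(r"https?://\S+|www\.\S+")
PUNCT_TABLE = str.maketrans("", "", string.punctuation)
# Small hypothetical stop-word list; a library list could be used instead.
STOP_WORDS = {"a", "the", "is", "are", "to", "at"}

def preprocess(text):
    text = text.lower()                 # lower casing
    text = URL_PATTERN.sub("", text)    # URLs first, while "://" is intact
    text = text.translate(PUNCT_TABLE)  # then punctuation
    words = [w for w in text.split() if w not in STOP_WORDS]
    return " ".join(words)

print(preprocess("LiveChat is online at the moment - https://example.com/abc"))
# livechat online moment
```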

Thanks for reading. Keep learning and stay tuned for more!